In brief - What are the considerations for schools in promoting safe and responsible use of artificial intelligence technology? This article will provide some guidance in preparing your framework for the use of generative AI in schools.

Background

Since ChatGPT's release in late 2022, Australian schools have moved to ban the use of artificial intelligence (AI) tools due to concerns about plagiarism and adverse learning outcomes. Generative AI technology, such as ChatGPT, is considered to have the potential to revolutionise society, much like the introduction of the internet. The Education Minister has now backflipped on the initial stance and is supporting a national framework guiding the use of AI technologies in schools from 2024. The education sector must now be proactive in its approach to supporting and educating students on the responsible use of AI in the classroom.

Regulatory landscape

In August 2023, Education Services Australia (ESA) released the framework "AI in Australian Education Snapshot: Principles, Policy and Practice" (the ESA Framework), which details the current global policy landscape and recommends guiding principles to ensure that AI used in classrooms is safe, impactful and measurable.

In Australia, AI is not yet independently regulated. However, there are a number of legal obligations which should be considered when developing your framework for the use of AI in classrooms, including:

- Privacy considerations - Generative AI actively learns from the information entered into it and the information available online. This presents a risk to schools in the event of unauthorised disclosure of personal information. Schools should consider using a platform which has been built and designed for use in the classroom and which is transparent about the collection, storage and use of data. Students and staff using the platform should also avoid including any personal information in questions put to generative AI platforms.

- Child safety - Schools should consider using a platform which has been built and designed specifically for use in schools, which protects users from accessing inappropriate information and which offers improved privacy and security controls. Microsoft recently developed the first generative AI chatbot for use in schools, which was trialled in eight schools in South Australia.

- Bias - AI can be prone to algorithmic bias because it learns from the information provided by users, which can itself be biased. For example, if it is repeatedly asked for discriminatory outcomes, it can produce answers which are biased. That is, it can learn a false truth.

- Intellectual property and copyright law - AI can infringe copyright if it uses copyright-protected work without permission. Additionally, AI programs may generate an output which resembles existing copyrighted works.

- Directors' duties - Most independent schools have a board responsible for governance. The board will need to take an active role in developing the policy and ensure that it consults with industry experts in developing the school's framework.

Responsible use of AI in the classroom

In July 2023, the NSW Department of Education released a draft consultation paper for the Australian Framework for Generative Artificial Intelligence in Schools (the NSWDE Framework). At its core, the NSWDE Framework discusses the ethical considerations for the use of AI in classrooms and how they interact with current Australian laws. The NSWDE Framework outlines six core elements underpinning the safe and ethical use of generative AI tools in Australian schools:

- Teaching and learning - Generative AI tools are used to enhance teaching and learning. Students should learn about generative AI tools and how they work, including their potential limitations and biases.

- Human and social wellbeing - Generative AI tools are used to benefit all members of the school community and should be used to explore diverse ideas and perspectives, avoiding the reinforcement of existing biases.

- Transparency - Students, teachers and schools need to understand how generative AI tools work, and when and how these tools are impacting them. Users should be informed about the function of the AI tool, why it is being used in the classroom, the implications of its use including risks, and how input data can influence outputs.

- Fairness - Generative AI tools are used in ways that are accessible, fair and respectful.

- Accountability - Generative AI tools are used in ways that are open to challenge and retain human agency and accountability for decisions. Generative AI in schools should always be used under human supervision with appropriate safeguards in place.

- Privacy and security - Students and others using generative AI tools must ensure they protect their privacy and data. This core element considers the importance of compliance with Australian privacy laws, including disclosing to students and parents the collection, use and storage of data collected by AI tools, protecting personal information input by students, and implementing cybersecurity measures against risks that may arise from the use of generative AI.

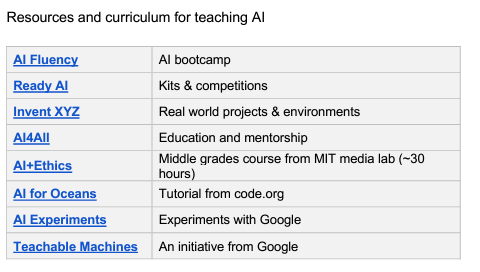

The ESA Framework provides some resources and curriculum which have been developed for teaching AI:

Conclusion

To navigate the legal issues surrounding AI in schools, we recommend schools adopt a multidisciplinary and proactive approach in developing their strategy for the use of AI, including:

- Engage legal professionals to provide up-to-date advice on the development of AI regulations.

- Establish an AI taskforce which includes at least one director who is responsible for the development, implementation and regular review of the school's policies, practices and advice to teachers, students and parents.

- Consider engaging a full-time data protection officer who understands the regulatory framework and can lead your school's dynamic approach to the use of AI.

- Upskill your teachers to become AI literate.

- Develop a curriculum on the responsible use of AI, covering subjects such as ethics, data privacy, misinformation and critical thinking.

- Develop an AI use policy for students and for staff members which is aligned with the school's other policies, including its privacy and data protection policies.

This is commentary published by Colin Biggers & Paisley for general information purposes only. This should not be relied on as specific advice. You should seek your own legal and other advice for any question, or for any specific situation or proposal, before making any final decision. The content also is subject to change. A person listed may not be admitted as a lawyer in all States and Territories. © Colin Biggers & Paisley, Australia 2024.